- Google Translate app picture how to

- Google Translate app picture Android

- Google Translate app picture code

- Google Translate app picture download

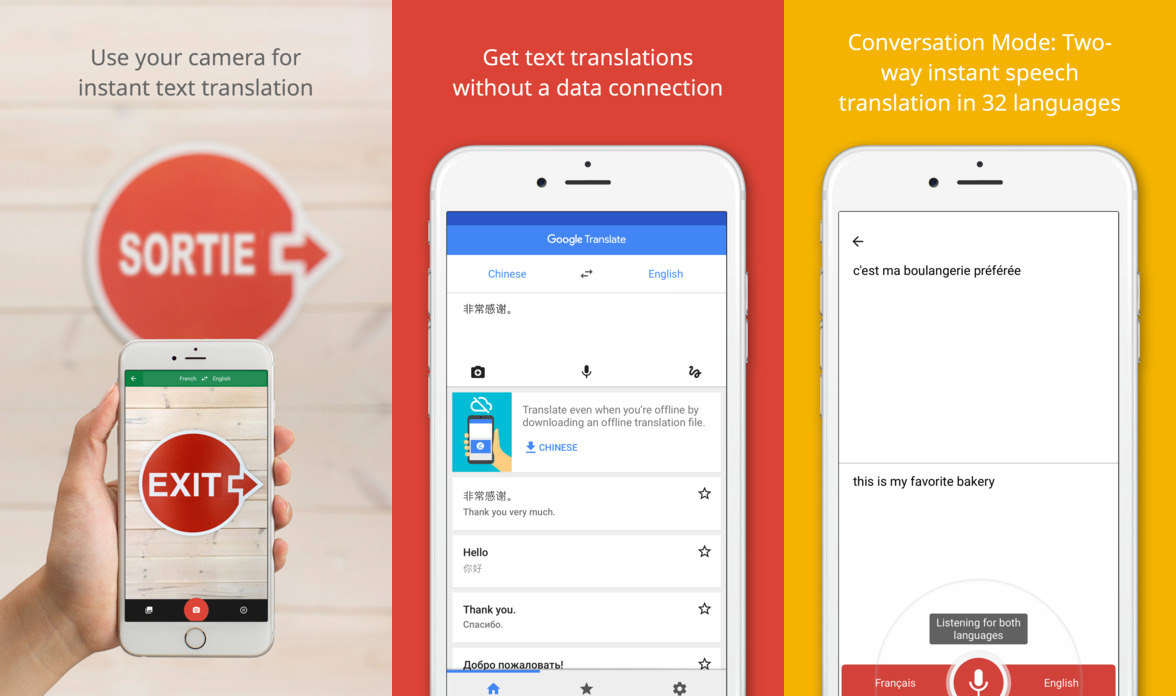

There are quite literally hundreds of translation apps out there. The goal was to find useful, intuitive apps that non-Japanese speakers could use to communicate with locals, figure out what’s what, and generally get around. For this reason, I didn’t include grammar-focused Japanese-learning apps, ‘phrasebook’ apps, or dictionary apps.

I took six translation apps out into the Tokyo wilderness for a spin. These were downloaded from the App Store on an iPhone, though some of them are also available on Android. In no particular order, these are the apps I tested:

1. There are three main parts to this app: photo, voice, and text translation. To translate a photo, you point your phone camera at the text you want to read, and the optical character recognition (OCR) technology “reads” the text and displays the translation directly on your phone screen, replacing the original text. You can also type in English text to be translated into Japanese (or other languages), and vice versa. There’s also an option to speak into the phone’s built-in microphone.

Image © Florentyna Leow

Japanese–English and English–Japanese Translation Apps for iPhones

Spoiler: It’s not 100% accurate, but out of all the apps I tested, Photo Translator performed best with handwritten menus.

Google Translate app picture Android

Import project and verify ML Kit and CameraX dependencies

Import the starter project into Android Studio.

Google Translate app picture code

Google Translate app picture download

Non-relevant concepts and code blocks are already provided and implemented for you. Click the following link to download all the code for this codelab:

Google Translate app picture how to

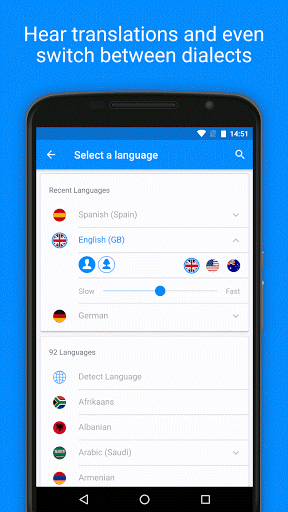

How to use the CameraX library with ML Kit APIs.ML Kit Text Recognition, Language Identification, Translation APIs and their capabilities.How to use the ML Kit SDK to easily add Machine Learning capabilities to any Android app.In the end, you should see something similar to the image below. Lastly, your app will translate this text to any chosen language out of 59 options, using the ML Kit Translation API. It'll use ML Kit Language Identification API to identify language of the recognized text. Your app will use the ML Kit Text Recognition on-device API to recognize text from real-time camera feed. In this codelab, you're going to build an Android app with ML Kit. This codelab will also highlight best practices around using CameraX with ML Kit APIs. This codelab will walk you through simple steps to add Text Recognition, Language Identification and Translation from real-time camera feed into your existing Android app. Whether you need the power of real-time capabilities of Mobile Vision's on-device models, or the flexibility of custom TensorFlow Lite models, ML Kit makes it possible with just a few lines of code. ML Kit makes it easy to apply ML techniques in your apps by bringing Google's ML technologies, such as Mobile Vision, and TensorFlow Lite, together in a single SDK. There's no need to have deep knowledge of neural networks or model optimization to get started. Whether you're new or experienced in machine learning, you can easily implement the functionality you need in just a few lines of code. ML Kit is a mobile SDK that brings Google's machine learning expertise to Android and Android apps in a powerful yet easy-to-use package.
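The recognize, identify, translate flow described above could be wired into a CameraX `ImageAnalysis.Analyzer` roughly as follows. This is a minimal sketch, not the codelab's own implementation: the class name `TranslatingAnalyzer`, the `onResult` callback, and the default target language are illustrative assumptions, and it presumes the ML Kit text-recognition, language-id and translate artifacts plus CameraX are already on the classpath.

```kotlin
import androidx.camera.core.ExperimentalGetImage
import androidx.camera.core.ImageAnalysis
import androidx.camera.core.ImageProxy
import com.google.mlkit.nl.languageid.LanguageIdentification
import com.google.mlkit.nl.translate.TranslateLanguage
import com.google.mlkit.nl.translate.Translation
import com.google.mlkit.nl.translate.TranslatorOptions
import com.google.mlkit.vision.common.InputImage
import com.google.mlkit.vision.text.TextRecognition
import com.google.mlkit.vision.text.latin.TextRecognizerOptions

// Hypothetical analyzer: the name and the onResult callback are assumptions
// made for illustration, not part of the codelab's starter project.
class TranslatingAnalyzer(
    private val targetLanguage: String = TranslateLanguage.ENGLISH,
    private val onResult: (String) -> Unit,
) : ImageAnalysis.Analyzer {

    private val recognizer =
        TextRecognition.getClient(TextRecognizerOptions.DEFAULT_OPTIONS)
    private val languageIdentifier = LanguageIdentification.getClient()

    @androidx.annotation.OptIn(markerClass = [ExperimentalGetImage::class])
    override fun analyze(imageProxy: ImageProxy) {
        val mediaImage = imageProxy.image
        if (mediaImage == null) {
            imageProxy.close()
            return
        }
        // Pass the frame rotation so the recognizer sees upright text.
        val input = InputImage.fromMediaImage(
            mediaImage, imageProxy.imageInfo.rotationDegrees
        )
        recognizer.process(input)
            .addOnSuccessListener { result ->
                if (result.text.isNotBlank()) identifyAndTranslate(result.text)
            }
            // Close the frame only when recognition is done, so CameraX
            // can deliver the next one.
            .addOnCompleteListener { imageProxy.close() }
    }

    private fun identifyAndTranslate(text: String) {
        languageIdentifier.identifyLanguage(text).addOnSuccessListener { tag ->
            // fromLanguageTag returns null for undetermined ("und") or
            // unsupported languages; skip translation in that case.
            val source = TranslateLanguage.fromLanguageTag(tag)
                ?: return@addOnSuccessListener
            val translator = Translation.getClient(
                TranslatorOptions.Builder()
                    .setSourceLanguage(source)
                    .setTargetLanguage(targetLanguage)
                    .build()
            )
            // The translation model is downloaded on first use.
            translator.downloadModelIfNeeded()
                .onSuccessTask { translator.translate(text) }
                .addOnSuccessListener { translated -> onResult(translated) }
                .addOnCompleteListener { translator.close() }
        }
    }
}
```

An instance would be passed to `ImageAnalysis.setAnalyzer` together with an executor. The sketch creates a new translator for every recognized frame to keep the flow easy to follow; a real app would more likely cache translators per language pair and throttle how often frames are translated.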
